Build Your Own LLM Application with Streamlit and Prompt Engineering Tactics

Table of contents

- Objective

- What is Sentiment Analysis?

- What is a Language Translator?

- What is Speech Synthesis?

- What's a Table Q&A System?

- What is Summarization?

- What is a Question and Answering System?

- What is Prompt Engineering?

- Install the Needed Libraries

- Create an OpenAI function to use the API

- Sentiment Analysis Coding Part

- Language Translator Part

- Speech Synthesis

- Summary

Objective

We are going to build an amazing LLM application with a dynamic, interactive UI featuring awesome animations for NLP tasks such as sentiment analysis, language translation, speech synthesis, summarization, table question answering, and, finally, question answering. By the end of this project, you will understand how to build an LLM project and how to overcome the major problems you face when building an application with GPT-3.5 Turbo.

What is Sentiment Analysis?

Sentiment analysis, also known as opinion mining, is like reading people's online diaries and figuring out whether they're happy, sad, angry, or neutral about the things they're writing about. It uses fancy computer algorithms to scan text - like tweets or product reviews - and figure out the emotions behind them.

In Technical Terms

Sentiment analysis is a technique used in natural language processing that determines the emotional tone behind words to gain an understanding of the attitudes, opinions, and emotions expressed within an online mention. It is often used by businesses to detect sentiment in social data, gauge brand reputation, and understand customer experiences.

Where is Sentiment Analysis Utilized?

This technique is used in many different areas. Marketers use it to understand how people feel about their product or brand, so they can improve it or respond to criticism. News organizations can use it to gauge public opinion on certain topics. Even politicians can use it to understand public sentiment on policies or events. Overall, it's a powerful tool for anyone who needs to understand public opinion in the digital age.

We'll use sentiment analysis to learn more about what our users think of our LLM app. Sounds good, right?

What is a Language Translator?

In simpler, more humorous terms, a language translator is like your multilingual friend who helps you understand what people are saying in a language you don't speak. He takes words from one language, and like a skilled juggler, tosses them around and presents them back to you in a language you understand.

In Technical Terms

A language translator is a tool or software that converts text or speech from one language to another. It uses algorithms and machine learning techniques to recognize and translate languages.

Where Do We Use Language Translators?

Language translators are used in a variety of sectors. They're used in business for translating documents, websites, and communication with international clients. In the travel industry, they help tourists communicate in foreign countries. In the technology sector, they're used to make apps and websites accessible to users worldwide.

We're going to learn how to create a language translator that can translate text into more than 50 languages using prompting methods.

What is Speech Synthesis?

Think of speech synthesis as a robotic storyteller. You give it a piece of text, and it reads it out loud to you, just like a parent reading a bedtime story, but with less emotion and more monotone. It's like your electronic devices have learned to talk and now they won't stop reading everything they see!

In Technical Terms

Speech synthesis, often referred to as text-to-speech (TTS), is a type of technology that reads digital text aloud. It transforms the written words into spoken words, giving your devices the power to speak.

Where Do We Use Speech Synthesis?

Speech synthesis is widely used in various sectors. It's used in technology to enable devices to read out information to users, in education for reading books or texts to students, in navigation systems to provide auditory directions, and in accessibility tools to assist individuals with visual impairments.

We'll learn how to use the gTTS (Google Text-to-Speech) library to turn text into speech and save it as an audio file.

What's a Table Q&A System?

A table question and answering system is like a knowledgeable librarian who knows exactly where to find specific information in a huge table full of data. You ask a question, and it quickly finds the answer for you in the table, like a game of hide and seek!

In Technical Terms

A table question and answering system is a type of AI program that extracts answers to queries from structured data tables. It uses natural language processing and machine learning algorithms to understand the question and find the relevant answer in the table.

Where Are Table Question and Answering Systems Utilized?

Table question and answering systems are commonly used in data analysis, business intelligence, and decision-making processes. They help extract insights quickly from large datasets in tabular form.

We're going to dive into exciting prompting techniques that extract exactly the information the user's prompt asks for.
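The article's table Q&A code is covered in a separate post. What follows is only a minimal, hypothetical sketch of the prompting idea: serialise the table as CSV text, wrap it in delimiters, and tell the model to answer from the table alone. The function name `build_table_qa_prompt` and the fallback phrase are assumptions for illustration. With pandas, `df.to_csv(index=False)` produces the same kind of CSV text.

```python
import csv
import io
from typing import List, Sequence


def build_table_qa_prompt(header: Sequence[str], rows: List[Sequence[object]],
                          question: str) -> str:
    """Serialise a small table as CSV and ask the model to answer only from it."""
    buffer = io.StringIO()
    # lineterminator="\n" avoids the csv module's default "\r\n" line endings
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(rows)
    table_csv = buffer.getvalue().strip()

    return (
        "Answer the question using only the table delimited by triple quotes.\n"
        'If the table does not contain the answer, reply "Not found in the table".\n\n'
        f"Table:\n'''\n{table_csv}\n'''\n\n"
        f"Question: {question}"
    )


print(build_table_qa_prompt(["product", "price"], [["Laptop", 999], ["Mouse", 25]],
                            "Which product costs 25?"))
```

Putting the table inside delimiters makes it clear to the model where the data ends and the question begins.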

What is Summarization?

Summarization is like a movie trailer. It gives you the highlights and main ideas without making you sit through the whole thing. It's a way of saying, "Here's the big picture, without all the tiny details."

In Technical Terms

Summarization is the process of shortening a set of data computationally, to create a subset (the 'summary') that represents the most important or relevant information within the original content.

Where is Summarization Utilized?

Summarization is used in many fields, including data analysis, news, and report writing. It's also used in machine learning to condense large datasets into something more manageable.

Isn't it fascinating how we can condense user-provided content into a specific word limit?
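Here is a minimal sketch of a word-limited summarization prompt, assuming the same chat setup as the rest of the app. The helper names are hypothetical. LLMs often miss exact word counts, so the sketch also checks the limit after generation rather than trusting the instruction alone.

```python
def build_summary_prompt(text: str, max_words: int) -> str:
    """Ask for a summary that stays within a word budget."""
    return (
        f"Summarize the text delimited by triple quotes in at most {max_words} words.\n"
        "Keep only the main ideas.\n\n"
        f"Text: '''{text}'''"
    )


def within_word_limit(summary: str, max_words: int) -> bool:
    """Check the model's output, since the limit in the prompt is only a request."""
    return len(summary.split()) <= max_words
```

If `within_word_limit` returns False, you can re-send the request or ask the model to shorten its previous answer.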

What is a Question and Answering System?

A question and answering system, similar to a digital detective, uses artificial intelligence to 'investigate' or search through a vast amount of information to find the most relevant and accurate answer to your question. Just as a detective collects clues and pieces them together to solve a mystery, this system sifts through data to find the 'clues' that will provide the best response to your query.

In Technical Terms

A question and answering system is an AI-driven system that uses natural language processing to understand a user's question and provide an accurate, relevant answer by searching through a pre-defined source of information.

Where are Question and Answering Systems used?

Question and answering systems are widely used in various sectors. They are used in customer service as chatbots to answer customer queries, in education as digital tutors, in healthcare for diagnosing diseases based on symptoms, and in many other fields where quick and accurate information retrieval is necessary.

We will use a prompting technique to direct an AI application's thought process in order to efficiently find answers to user questions.
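Below is a hypothetical sketch of such a prompt. Numbered steps tell the model to find the relevant sentences first and only then answer, with an explicit fallback when the context has no answer. The function name and wording are assumptions, not the code from the follow-up post.

```python
def build_qa_prompt(context: str, question: str) -> str:
    """Guide the model through explicit steps and restrict it to the given context."""
    return (
        "You answer questions using only the context delimited by triple quotes.\n"
        "Work through it step by step:\n"
        "1. Find the sentences in the context that relate to the question.\n"
        "2. Answer using only those sentences.\n"
        "3. If they do not contain the answer, reply \"I don't know\".\n\n"
        f"Context: '''{context}'''\n\n"
        f"Question: {question}"
    )


print(build_qa_prompt("Paris is the capital of France.", "What is the capital of France?"))
```

The explicit "I don't know" fallback gives the model a permitted way out, which tends to reduce made-up answers when the context is insufficient.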

What is Prompt Engineering?

Prompt engineering is like a teacher guiding a student on what to focus on during an assignment. The teacher gives clear instructions, and the student follows them to get the right answer.

In Technical Terms

Prompt engineering is a method in natural language processing where prompts are designed to guide an AI model's response in a particular way.

In this case, the AI model is like a student, and you are the teacher giving clear instructions through prompts to achieve the desired result.

Where is Prompt Engineering Used?

Prompt engineering is used in various AI applications, like chatbots, virtual assistants, and automated writing tools, to improve their performance and make their responses more useful.

We'll apply prompt engineering methods to direct the LLM, in this case GPT-3.5 Turbo (the model behind ChatGPT), to give us the results we want.

We've explored all the theories, but now, don't you think it's time to dive into the exciting part – the world of coding? Let's start!

Install the Needed Libraries

streamlit

textblob

streamlit-option-menu

streamlit-extras

streamlit-lottie

gtts

pandas

python-dotenv

langchain

pymongo

streamlit-chat

openai

unstructured[all-docs]

langchain_openai

pinecone-client

Streamlit: A library for quickly turning Python scripts into interactive web applications.

TextBlob: A library for processing textual data that provides a simple API for common NLP tasks.

Streamlit-option-menu: A custom Streamlit component for creating interactive option menus in your app.

Streamlit-extras: A collection of extra components and utilities that enhance Streamlit applications.

Streamlit-lottie: Renders Lottie animations in your Streamlit apps.

gTTS: Short for Google Text-to-Speech. It converts text into speech and saves it as an audio file.

Pandas: A data manipulation library in Python, used for data cleaning, transformation, and analysis.

Python-dotenv: Loads environment variables from a .env file, which keeps confidential data such as API keys out of your source code.

LangChain: A framework for integrating LLMs into applications.

Pymongo: A Python driver for MongoDB, used for accessing and manipulating data stored in MongoDB.

Streamlit-chat: A Streamlit component for displaying chat-style messages.

OpenAI: The official Python client for the OpenAI API, used to access models such as GPT-3.5 Turbo for various NLP tasks.

Unstructured[all-docs]: Tools for working with unstructured data such as PDFs and Word documents. The [all-docs] extra installs support for all document types.

Langchain_openai: The integration package that connects LangChain with OpenAI models.

Pinecone-client: The Python client for Pinecone, a vector database service for machine learning applications.

Create an OpenAI function to use the API

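```python
import os

import openai
from dotenv import load_dotenv, find_dotenv

# Load variables such as OPENAI_API_KEY from the nearest .env file
load_dotenv(find_dotenv())


class OPEN_AI:
    def get_completion(self, message=None, temperature=0, model='gpt-3.5-turbo'):
        # Read the API key from the environment (populated by load_dotenv above)
        api = os.getenv('OPENAI_API_KEY')
        client = openai.OpenAI(api_key=api)

        # Send the list of chat messages to the Chat Completions endpoint
        response = client.chat.completions.create(
            model=model,
            messages=message,
            temperature=temperature,
        )
        # Return the text of the first generated choice
        return response.choices[0].message.content
```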

This code defines an OPEN_AI class with a get_completion method that uses OpenAI's gpt-3.5-turbo model to generate chat completions. It reads the API key from the OPENAI_API_KEY environment variable (python-dotenv can load it from a .env file), sends the messages to the OpenAI API, and returns the text of the first generated choice.

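- model: Specifies the AI model to use, in this case 'gpt-3.5-turbo'.
- messages: The list of chat messages (each a dictionary with a role and content) that the model responds to.
- temperature: Controls the randomness of the model's output. Higher values make the output more varied and "creative", but also more likely to drift off-topic or state things that are not true (hallucinate). A value of 0 gives the most consistent answers, which suits tasks like sentiment classification.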

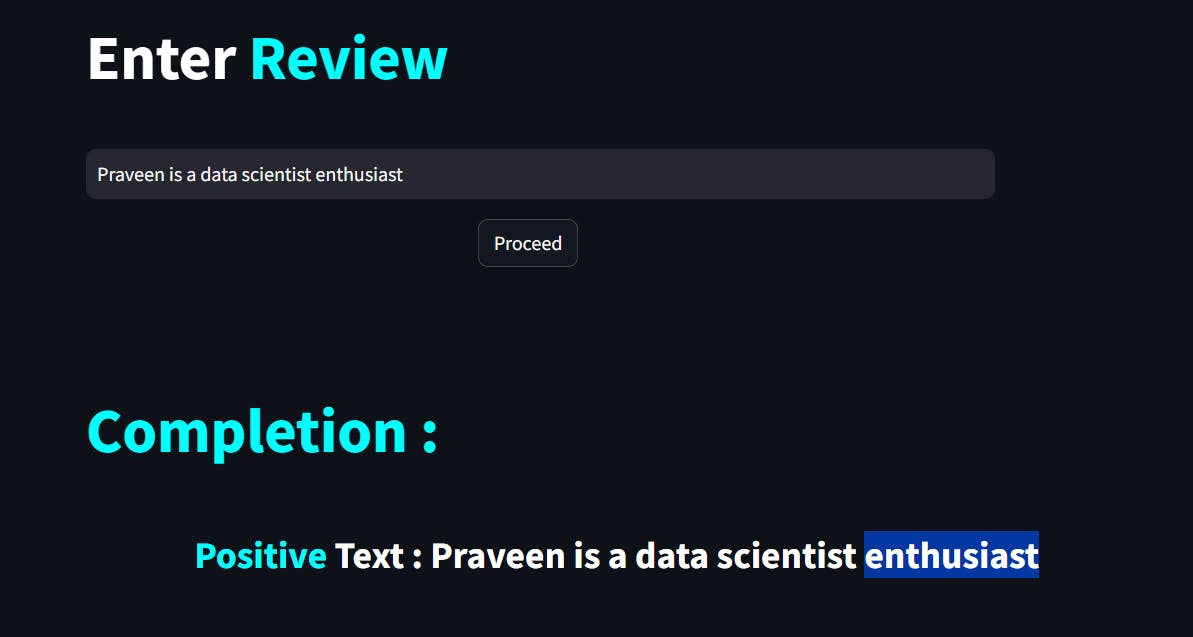

Sentiment Analysis Coding Part

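```python
import streamlit as st

# Collect the text to analyse from the Streamlit UI
text = st.text_input("Enter the text to analyse")

# Step-by-step instructions; the user text is wrapped in triple quotes
# so the model can tell the instructions apart from the input
prompt = f"""
Instruction:
1. Find the sentiment of the following text, which is delimited with triple quotes.
2. Give your answer as a single word, either "Positive", "Negative" or "Neutral".

Text: '''{text}'''
"""
```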

In this code snippet, the st.text_input function gets text input from the user in the Streamlit UI. The prompt variable is a string containing the instructions for the AI model. The numbered instructions break the task into explicit steps, a light form of chain-of-thought guidance, and the second step restricts the output to a single label. Because the prompt includes no worked examples, this is a zero-shot prompt; adding a few labelled examples would turn it into a few-shot prompt. The user's text is inserted into the prompt, which is then sent to the AI model to generate a response.

What is chain-of-thought prompting?

Chain-of-thought prompting asks the model to work through intermediate reasoning steps before giving its final answer. You can do this by spelling out the steps in the instructions or by including worked examples that show the reasoning. It is like guiding a conversation step by step towards a conclusion instead of jumping straight to the answer.
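Here is a minimal chain-of-thought version of the sentiment prompt, offered as a sketch. It asks the model to reason first and to put the final label on a fixed `Answer:` line, so the app can pull the label out of the longer reply. Both function names are hypothetical.

```python
def build_cot_prompt(text: str) -> str:
    """Ask the model to reason step by step, then give a parseable final line."""
    return (
        "Decide the sentiment of the text delimited by triple quotes.\n"
        "First, think step by step: list the words or phrases that signal emotion "
        "and explain what each one suggests.\n"
        "Then, on the last line, write 'Answer: ' followed by exactly one word: "
        "Positive, Negative or Neutral.\n\n"
        f"Text: '''{text}'''"
    )


def parse_final_answer(reply: str) -> str:
    """Return the label from the last line that starts with 'Answer:'."""
    for line in reversed(reply.strip().splitlines()):
        if line.strip().lower().startswith("answer:"):
            return line.split(":", 1)[1].strip()
    raise ValueError("No 'Answer:' line found in the model reply")
```

The visible reasoning costs extra tokens. The fixed final line keeps the result easy to display.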

What is few-shot learning?

Few-shot learning is like teaching a kid to recognize animals. You don't need to show them every cat or dog in the world for them to learn what cats and dogs look like. You just show them a few examples, and they can recognize new ones they've never seen before. That's few-shot learning: from a few examples in the prompt, the model infers what the user intends.
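With a chat model, one common way to give few-shot examples is as earlier user and assistant turns, so the model sees the pattern before the real input. Below is a minimal sketch with a hypothetical `build_few_shot_messages` helper. The resulting list could be passed as `message` to `get_completion`.

```python
from typing import Dict, List, Tuple


def build_few_shot_messages(examples: List[Tuple[str, str]],
                            text: str) -> List[Dict[str, str]]:
    """Turn (text, label) pairs into alternating user/assistant chat turns."""
    messages = [{
        "role": "system",
        "content": "Classify the sentiment of the user's text as Positive, "
                   "Negative or Neutral. Reply with one word.",
    }]
    for example_text, label in examples:
        messages.append({"role": "user", "content": example_text})
        messages.append({"role": "assistant", "content": label})
    # The real input goes last, so the model answers it in the same format
    messages.append({"role": "user", "content": text})
    return messages


examples = [("I love this app!", "Positive"), ("It keeps crashing.", "Negative")]
print(build_few_shot_messages(examples, "It works, I guess."))
```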

What is zero-shot learning?

In zero-shot inference, you ask the AI model a question directly, without providing examples or reasoning steps. The model relies on its pre-existing knowledge to generate an appropriate response. Typing a plain question into ChatGPT is a typical example.

Leverage the System Message

System and human (user) messages guide an AI's response. The system message gives the model its instructions, and the human message carries the user's input. Separating the two helps the model generate context-aware responses, which is useful for chatbots and virtual assistants that need to maintain a conversation flow.

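```python
# get_completion lives on the OPEN_AI helper class defined earlier
response = OPEN_AI()

system_message = {'role': 'system', 'content': 'Provide your own sys message'}
human_message = {'role': 'user', 'content': prompt}
message = [system_message, human_message]

# The model's reply, e.g. "Positive"
sentiment = response.get_completion(message=message)
```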

This code sends the sentiment prompt to the AI model. The system_message sets the instructions for the AI, and the human_message carries the prompt built from the user's input. The message list bundles both and is what get_completion sends to the model. The model's reply, a single sentiment label, is stored in the sentiment variable.
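Besides system and user messages, the chat API also accepts assistant messages. This is how a chatbot keeps the conversation flowing: it sends the earlier turns back with each request. Below is a minimal sketch. `chat_turn` is hypothetical, and `complete` stands for any function that sends a message list to the model, such as `lambda m: response.get_completion(message=m)`.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def chat_turn(history: List[Message], user_text: str,
              complete: Callable[[List[Message]], str]) -> str:
    """Append the user's message, get a reply, and store it as an assistant message."""
    history.append({"role": "user", "content": user_text})
    reply = complete(history)
    # Saving the reply lets the model see the earlier exchange on the next turn
    history.append({"role": "assistant", "content": reply})
    return reply
```

Because the whole history is resent each time, long conversations grow the request size. Real apps often trim or summarise older turns.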

Result of User Interface

The result is displayed in a text colour that changes with the detected sentiment, along with a few other touches. Check my GitHub for examples.
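Even with a one-word instruction, the model may add punctuation, change the capitalisation, or wrap the label in a sentence. Below is a hypothetical sketch that maps the reply onto one of the three labels and picks a display colour for it. The colour choices and the fallback to "Neutral" are assumptions.

```python
import re

# Hypothetical colour choices for displaying each label
SENTIMENT_COLOURS = {"Positive": "green", "Negative": "red", "Neutral": "gray"}


def normalize_sentiment(reply: str) -> str:
    """Map a free-form model reply onto one of the three expected labels."""
    words = re.findall(r"[a-z]+", reply.lower())
    for label in SENTIMENT_COLOURS:
        if label.lower() in words:
            return label
    return "Neutral"  # fallback when the reply contains none of the labels
```

In Streamlit, the coloured label can then be shown with `st.markdown(f":{SENTIMENT_COLOURS[label]}[{label}]")`. This is a simple keyword match, so a reply such as "not positive" would still map to Positive.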

Keep in mind: we'll use the same get_completion method for all tasks. From now on, just concentrate on changing the prompt for each task.

Language Translator Part

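```python
import streamlit as st

col1, col2 = st.columns(2)

# Text to translate, and the target language (example options, not the full list)
text = col1.text_input("Text to translate")
your_target_lan = col2.selectbox("Target language", ["French", "German", "Hindi", "Spanish"])

prompt = f"""
Instruction:
# Translate the following text to {your_target_lan}:
Text: {text}
"""

system_message = {'role': 'system', 'content': 'Provide your own sys message'}
human_message = {'role': 'user', 'content': prompt}
message = [system_message, human_message]

# Use a new name so the OPEN_AI instance ("response") is not overwritten
translation = response.get_completion(message=message)
```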

This code snippet builds a language translation prompt in Streamlit. The user enters the text to be translated, and the target language is inserted into the prompt through your_target_lan. The system_message sets the instructions for the AI, and the human_message carries the prompt. The response from the AI model is the translated text.
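Below is a hypothetical variant of the translation prompt. It validates the target language and asks for the translation alone, so the app does not have to strip explanations out of the reply. `SUPPORTED_LANGUAGES` is an illustrative subset, not the article's full list.

```python
from typing import Dict, List

# Hypothetical subset of the languages the app could offer
SUPPORTED_LANGUAGES = ["English", "French", "German", "Hindi", "Japanese", "Spanish"]


def build_translation_messages(text: str, target_language: str) -> List[Dict[str, str]]:
    """Build system/user messages for a translation request."""
    if target_language not in SUPPORTED_LANGUAGES:
        raise ValueError(f"Unsupported target language: {target_language}")
    system = ("You are a professional translator. Reply with the translation only, "
              "without explanations or quotes.")
    user = (f"Translate the text delimited by triple quotes into {target_language}.\n"
            f"Text: '''{text}'''")
    return [{"role": "system", "content": system},
            {"role": "user", "content": user}]
```

Validating the language before calling the API avoids paying for a request that can only produce an unusable answer.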

Result of User Interface

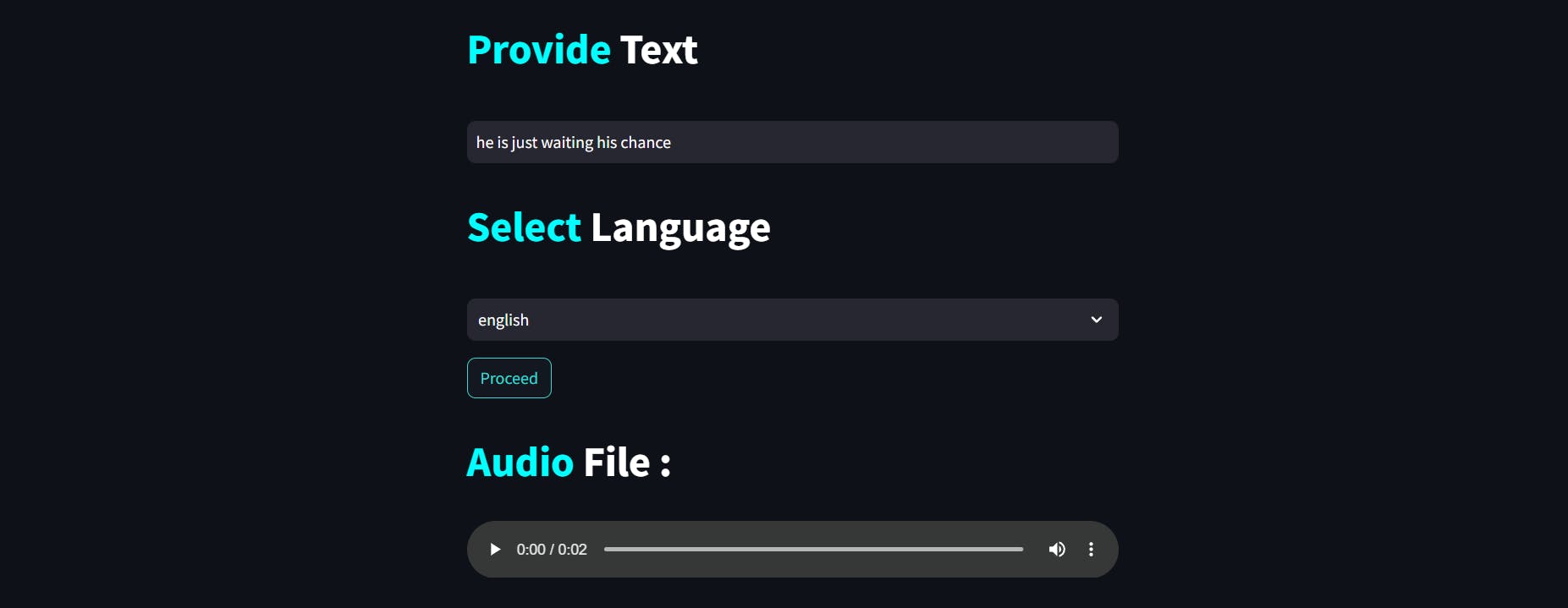

Speech Synthesis

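```python
import streamlit as st
from gtts import gTTS

# Text the user wants to hear read aloud
user_text = st.text_area("Enter the text to convert to speech")

if st.button('Proceed') and user_text:
    # English language specified here; you can specify any language gTTS supports
    language = 'en'

    # Object creation for the gTTS class
    text_to_speech = gTTS(text=user_text, lang=language)

    # Save the output as an MP3 file and play it back in the app
    text_to_speech.save("output.mp3")
    st.audio("output.mp3", format="audio/mpeg")
```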

This code snippet creates a text-to-speech conversion system in Streamlit. The user inputs text, and upon clicking 'Proceed', the Google Text-to-Speech (gTTS) library converts the text into an audio file named "output.mp3". The audio file is then played back to the user.
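The snippet hardcodes `lang='en'`. Below is a minimal sketch that lets the user pick the voice language instead, using a lookup table from display names to language codes that gTTS accepts. The table is a hypothetical subset. If you would rather build the list from the library itself, `gtts.lang.tts_langs()` returns a dictionary of the supported codes and language names.

```python
# Hypothetical display names mapped to language codes that gTTS accepts
TTS_LANGUAGES = {"English": "en", "French": "fr", "Hindi": "hi", "Spanish": "es", "Tamil": "ta"}


def resolve_tts_language(display_name: str) -> str:
    """Return the gTTS language code for a display name, or raise a clear error."""
    try:
        return TTS_LANGUAGES[display_name]
    except KeyError:
        raise ValueError(f"No gTTS language code configured for {display_name!r}") from None
```

In the app, this could be wired up as `language = resolve_tts_language(st.selectbox("Voice language", list(TTS_LANGUAGES)))`.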

Result of User Interface

Note: For summarization, table QA, and QA system explanations, check out this blog.

Summary

This article provides a comprehensive guide to building a large language model (LLM) application with a dynamic, interactive UI. The application covers NLP tasks including sentiment analysis, language translation, speech synthesis, summarization, table question answering, and question answering using GPT-3.5 Turbo. I have explained each task, its technical aspects, and its real-world applications. The guide also delves into prompt engineering and how it is used to direct an AI model's response. The coding part of the article shows how to install the necessary libraries and write the code for the NLP tasks, using OpenAI's gpt-3.5-turbo model for text generation.